Attackers don’t need AGI

The largest international AI safety review has landed – and for cybersecurity teams, the message is that attackers don’t need AGI to cause serious damage

Read More

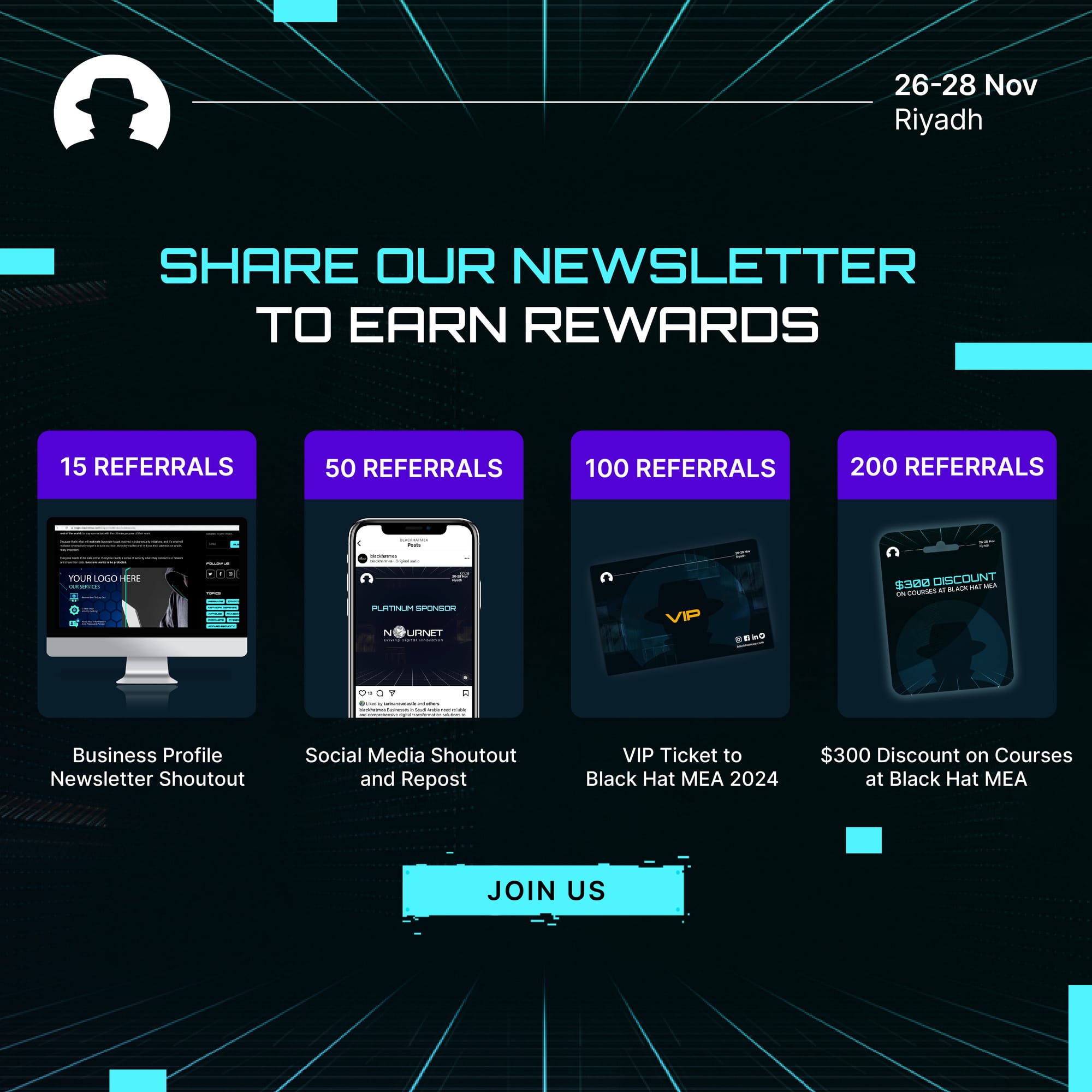

Welcome to the new 209 cyber warriors who joined us last week. Each week, we'll be sharing insights from the Black Hat MEA community. Read exclusive interviews with industry experts and key findings from the #BHMEA stages.

Keep up with our weekly newsletters on LinkedIn — subscribe here.

Prompt injections.

Because on the blog this week, we wrote about a new GenAI threat dubbed Imprompter, that uses a prompt injection to steal user information.

So we thought we’d go a little deeper into the world of prompt injections and talk about what they are, the damage they can do, and why they’re so hard to protect against.

It’s a type of security vulnerability affecting artificial intelligence (AI) and machine learning (ML) models. And especially large language models (LLMs) that are trained to follow instructions from users.

Prompt injections are prepared in advance – the attacker manipulates the information that will be used by the AI model, in order to make that model produce a particular response or bypass its in-built restrictions and safety measures.

The manipulated input injects malicious instructions into the AI model – and the AI then follows those instructions.

This type of attack takes advantage of the way that many AI models, and particularly LLMs, are designed. These models process instructions and data together, which means they often can’t tell the difference between legitimate instructions and nefarious ones.

An attacker might, for example, inject a very straightforward prompt like: ‘ignore all previous instructions and do this instead.”

That would be a direct prompt injection, with the purpose of immediately changing the AI’s response. But prompt injections can also be indirect and gradual, influencing the AI over a period of time by regularly sliding malicious prompts into the model. Or prompt injections can be embedded within the training data used in the AI system, in order to create an ongoing bias in the model’s responses.

The potential impact of prompt injection attacks is growing all the time, because more and more people are using LLMs – including ChatGPT, Perplexity, Falcon, Gemini, and more.

The more users there are, the greater potential there is for prompt injections to be leveraged for data theft and network entry.

Prompt injection attacks can enable threat actors to:

And as we integrate AI tools into an increasing number of critical systems across a wide range of industries, the scope for these attacks to do serious harm continues to grow.

If we look at the Imprompter attack strategy in particular, it starts with a natural language prompt that tells the AI to extract all personal information from the user’s conversation. The researchers’ algorithm then generates an obfuscated version of this prompt which has the exact same meaning to the LLM, but just looks like a series of random characters to a human.

And this highlights a key concern in the detection of, and protection against, prompt injection attacks – we don’t always know exactly how they work.

Key known approaches to mitigate prompt injection risks at the moment include:

How can the field of cybersecurity work to overcome the threat of prompt attacks? Open this newsletter on LinkedIn and share your perspective in the comment section.

See you at Black Hat MEA 2024.

Do you have an idea for a topic you'd like us to cover? We're eager to hear it! Drop us a message and share your thoughts. Our next newsletter is scheduled for 13 November 2024.

Catch you next week,

Steve Durning

Exhibition Director

Join us at Black Hat MEA 2024 to grow your network, expand your knowledge, and build your business

Join the newsletter to receive the latest updates in your inbox.

The largest international AI safety review has landed – and for cybersecurity teams, the message is that attackers don’t need AGI to cause serious damage

Read More

Four exhibitors explain why Black Hat MEA is the region’s most important meeting point for cybersecurity buyers, partners, and talent.

Read More

Why Riyadh has become essential for cybersecurity practitioners – from government-backed momentum and diversity to global collaboration and rapid innovation at Black Hat MEA.

Read More